Jo: run local LLMs on your PC without a GPU

Jo is a simple AI assistant for running LLMs locally on a PC, with no GPU required.

Jo is a personal project I started in March 2025. It is still in development and is not public yet.

Principle

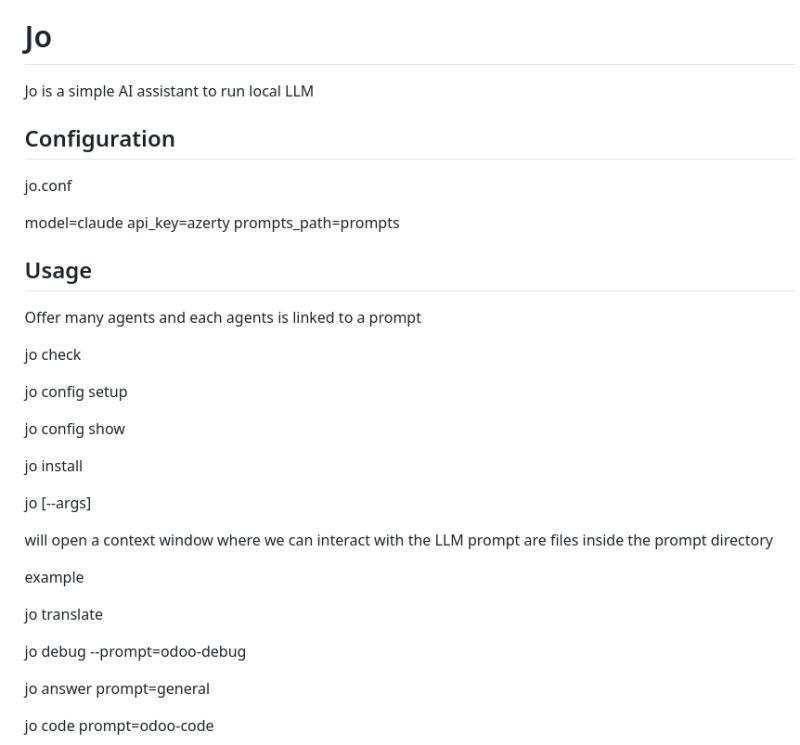

Jo offers several AI agents, and each agent is linked to a prompt that can be found in the prompts folder. Currently it runs only on Mistral 2B, for the translator agent, and the default configuration can be found in the jo.conf file.

After installation, you can view the configuration by running: uv run jo.py config show
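The link between an agent and its prompt could be sketched as follows. This is only an illustration, not Jo's actual code: the function name `load_prompt`, the `.txt` extension, and the one-file-per-agent layout are all assumptions.

```python
from pathlib import Path


def load_prompt(agent: str, prompts_dir: Path = Path("prompts")) -> str:
    """Return the prompt text for an agent.

    Assumed (hypothetical) layout: one file per agent, prompts/<agent>.txt.
    """
    prompt_file = prompts_dir / f"{agent}.txt"  # assumed naming scheme
    if not prompt_file.is_file():
        # List the prompts that do exist to make the error actionable.
        available = sorted(p.stem for p in prompts_dir.glob("*.txt"))
        raise FileNotFoundError(
            f"No prompt for agent {agent!r}; available: {available}"
        )
    return prompt_file.read_text(encoding="utf-8")
```

With this layout, adding a new agent would only require dropping a new prompt file into the folder.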
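As a rough idea of what a config display command might do, here is a minimal sketch. It assumes jo.conf is an INI-style file, which is not confirmed; the helper `show_config` and the section and key names in the example are hypothetical.

```python
import configparser


def show_config(text: str) -> str:
    """Render INI-style configuration text as 'section.key = value' lines."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    lines = []
    for section in parser.sections():
        for key, value in parser[section].items():
            lines.append(f"{section}.{key} = {value}")
    return "\n".join(lines)


# Hypothetical jo.conf content; the real file's sections and keys may differ.
EXAMPLE_CONF = """
[translator]
model = mistral
prompt = translator
"""

if __name__ == "__main__":
    print(show_config(EXAMPLE_CONF))
```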

General usage

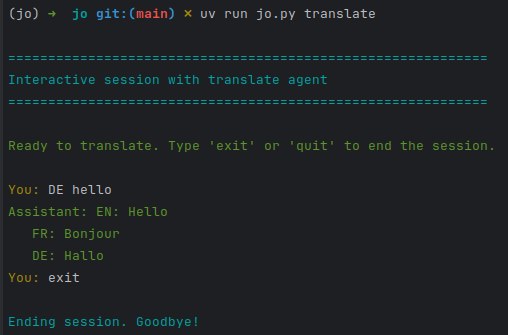

The idea behind Jo is to invoke a specific agent for a specific task. For example, you can call a translator agent, a coding agent, and so on. To do so, run the following command:

uv run jo.py [--args]

> SOON: jo [--args]

> jo debug --prompt=odoo-debug

> jo answer --prompt=general

> jo code --prompt=odoo-code

Jo then opens a context window where you can interact with the LLM through a dedicated prompt from the prompts folder.

For now, only the translator agent is available.
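The planned command shape (an agent name followed by a prompt option) could be parsed with the standard library's `argparse`. The sketch below is an assumption about how such a CLI might be wired, not Jo's actual implementation; the subcommand list, including the `translate` name, is hypothetical.

```python
import argparse

# Agent names loosely based on the examples in this README;
# the exact subcommand names are assumptions.
AGENTS = ("translate", "debug", "answer", "code")


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with one subcommand per agent and a --prompt option."""
    parser = argparse.ArgumentParser(prog="jo", description="Invoke a Jo agent.")
    subparsers = parser.add_subparsers(dest="agent", required=True)
    for name in AGENTS:
        sub = subparsers.add_parser(name, help=f"Run the {name} agent")
        sub.add_argument(
            "--prompt",
            default=None,
            help="Name of a prompt in the prompts folder",
        )
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(f"agent={args.agent} prompt={args.prompt}")
```

For instance, `build_parser().parse_args(["debug", "--prompt=odoo-debug"])` yields a namespace with `agent` set to `"debug"` and `prompt` set to `"odoo-debug"`, which the tool could then use to pick the prompt before opening the context window.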